AI-generated image of a mammoth in Norway.

The crisis of trust in digital content: GenAI and Content Authenticity

It’s becoming harder to know what’s real on the internet, whether in your social media feeds or on the news. A convincing photo, a video of a public figure saying something controversial, or a seemingly credible news clip: any of these could be entirely fabricated, generated by artificial intelligence, or manipulated to mislead. This kind of fake content has become part of everyday life, and the consequences are already unfolding, from elaborate scams to election interference.

Fake content has always been around, but generative AI has made it dramatically faster and easier to produce. As a result, the trust we once placed in what we see with our own eyes is facing a serious crisis.

Let’s explore how deepfakes and AI-generated media fuel misinformation, how they threaten trust in digital content and the news, and why we need new methods and tools to verify the authenticity of what we see online.

The rise of AI-generated content in media

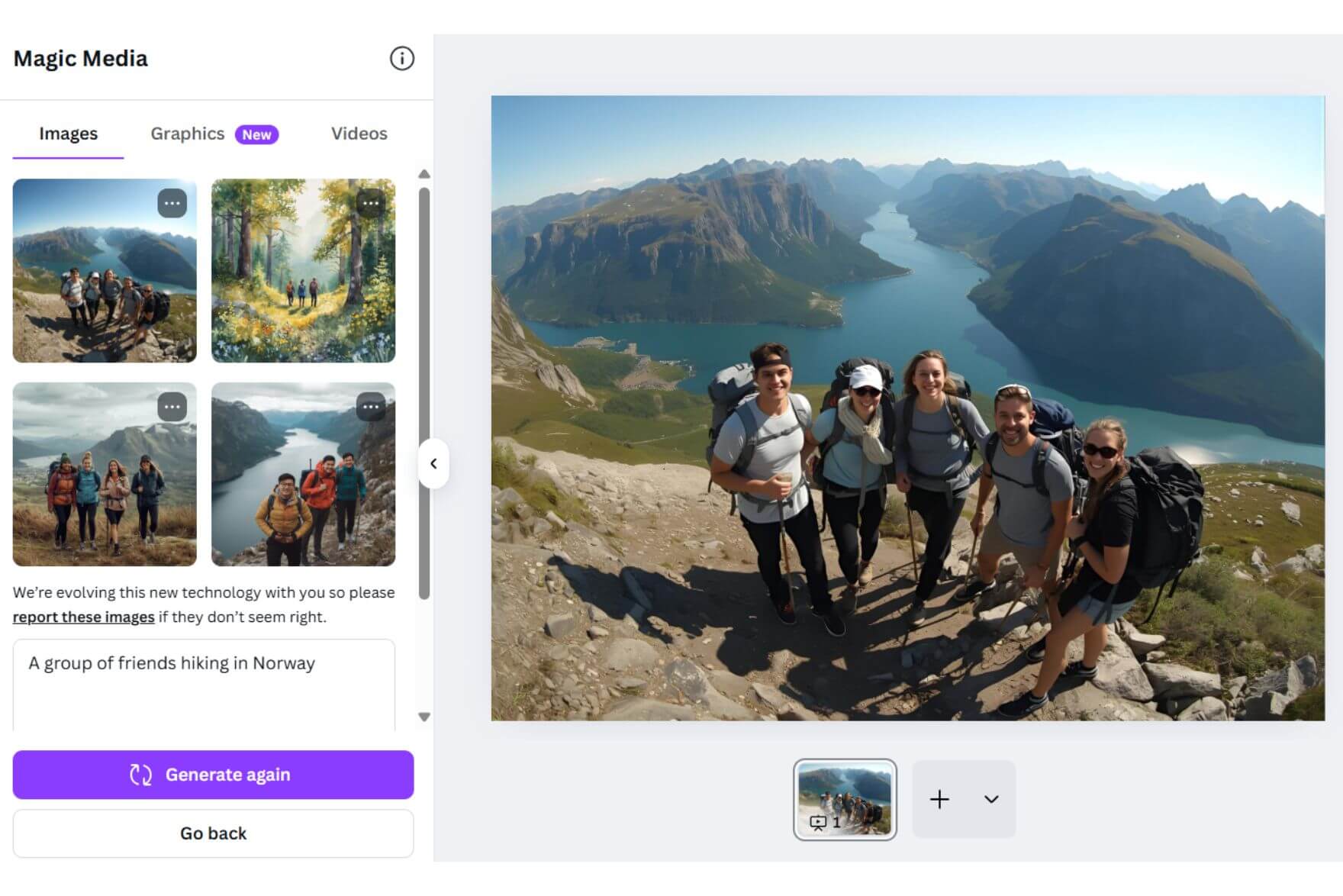

Generative AI has transformed how digital content is created. Tools like ChatGPT, Midjourney, Sora, and Adobe Firefly can now generate highly realistic images, videos, voices, and text within seconds. This opens up new creative possibilities in marketing, media, and education, helping to produce useful, educational content with a positive impact on society.

However, the same technology can be misused. GenAI also lowers the barrier to producing content that is intended not to have a positive impact but to mislead, discredit, or cause harm.

It can be used to fabricate realistic but entirely false content: images of news events that never happened, fake voice messages from public figures, or manipulated videos that appear genuine to the untrained eye. Unlike traditional image or video editing, these AI-generated outputs can be created at scale, with minimal effort and no specialized knowledge. As a result, fake media is flooding social feeds, messaging apps, and even news platforms, often with no label indicating where it came from or that it is artificial.

As generative AI becomes more sophisticated, distinguishing between authentic and synthetic content will only get harder. For businesses, media outlets, and audiences alike, this evolution raises urgent questions about credibility, responsibility, and the future of content trust.

Deepfakes: How they’re made and used

Deepfakes are among the most widely recognized forms of synthetic content. They’re created using machine learning models, often generative adversarial networks (GANs), to manipulate images, video, audio, or other media, for example by replacing someone’s face or voice with another’s. A deepfake can show someone appearing to say or do something they never did, and the results are increasingly difficult to identify as fake.

Research shows that humans detect deepfake images with just 62% accuracy, barely better than chance. For deepfake videos, accuracy can dip as low as 23% (Bray, Johnson, & Kleinberg, 2022).

Deepfakes began as a novelty, but they are now increasingly used for harmful purposes, and the problem has grown into a full-blown deepfake crisis. They’ve been used to spread political disinformation, discredit public figures, and commit fraud. A growing concern is the use of deepfakes in phishing and impersonation scams, where cloned voices and faked video are used to trick individuals into transferring money or sharing sensitive data (Jumio Online Identity Study, 2025).

Notable deepfake examples

News events

In May 2023, an AI-generated image of an explosion outside the Pentagon went viral, causing public alarm and a brief drop in U.S. stocks. The Department of Defense quickly confirmed the image was fake, and emergency services reassured the public (Los Angeles Times), but the incident shows how deepfakes can spread dangerous misinformation with real-world impact, in this case on the stock market.

Corporate

In 2024, high-profile deepfake scams targeted major European companies using AI voice cloning and impersonation techniques. In May, scammers targeting the UK advertising group WPP created a fake WhatsApp account and used an AI-generated clone of CEO Mark Read’s voice during a Microsoft Teams meeting to solicit money and sensitive information. Alert staff members stopped the fraud (The Guardian).

Similarly, in July, criminals tried to deceive Ferrari executives over WhatsApp, using a voice clone that imitated CEO Benedetto Vigna’s distinctive southern Italian accent to discuss a supposedly confidential acquisition. The scam failed when an executive asked a verification question only the real CEO could answer (Fortune).

Celebrities

In early 2025, the BBC reported a case that became a high-profile example of how deepfakes can exploit emotional trust and cause serious financial and psychological harm: a French woman lost €830,000 after being drawn into a fake online relationship with a deepfake version of Brad Pitt. Over more than a year, scammers used AI-generated videos and images to impersonate the actor, convincing her to transfer large sums of money for fabricated reasons such as medical bills and legal troubles.

In March 2023, an image of Pope Francis wearing a white Balenciaga-style puffer jacket went viral on social media. The picture, generated with the AI tool Midjourney, fooled many users into thinking it was authentic before fact-checkers confirmed it was fake (CBS News). The “Balenciaga Pope” quickly became a global meme, but it also highlighted how easily AI-generated images can blur the line between humor, fashion commentary, and misinformation.

These examples illustrate how deepfakes and synthetic media are no longer isolated incidents but part of a growing problem with real-world consequences. Even when fake images or videos are identified quickly, they are often shared widely and reach millions of people before they are exposed. By then, the damage to public trust is done.

The decline of trust in digital content

The growing number of fake news incidents involving AI-generated images and videos points to a troubling trend: the steady erosion of public trust in digital content.

According to the 2025 Edelman Trust Barometer, 70% of respondents worry that journalists and reporters purposely mislead people.

The World Economic Forum’s Global Risks Report 2025 also named misinformation and disinformation among the major risks for the next two years, pointing to AI-generated content as a driver of declining trust in institutions.

Similarly, the Reuters Institute Digital News Report 2025 found that 58% of respondents worry about the authenticity of news content, a concerning trend for societies that rely on an informed public.

The result is a crisis of content trust. It’s no longer enough for content to look or sound authentic; audiences increasingly question whether what they see is real at all. News organizations, social media platforms, and public institutions are under increasing pressure to verify content, but the speed and scale of AI-driven misinformation outpace manual verification. Confidence in news, organizations, institutions, and even peer-to-peer communication is declining.

To address this crisis of confidence, we need to rebuild the conditions that made trust possible in the first place, for example by improving media literacy. But we also need to create systems where the authenticity of content is verifiable at the point of publication and across its digital journey.

The need for authenticity and traceability

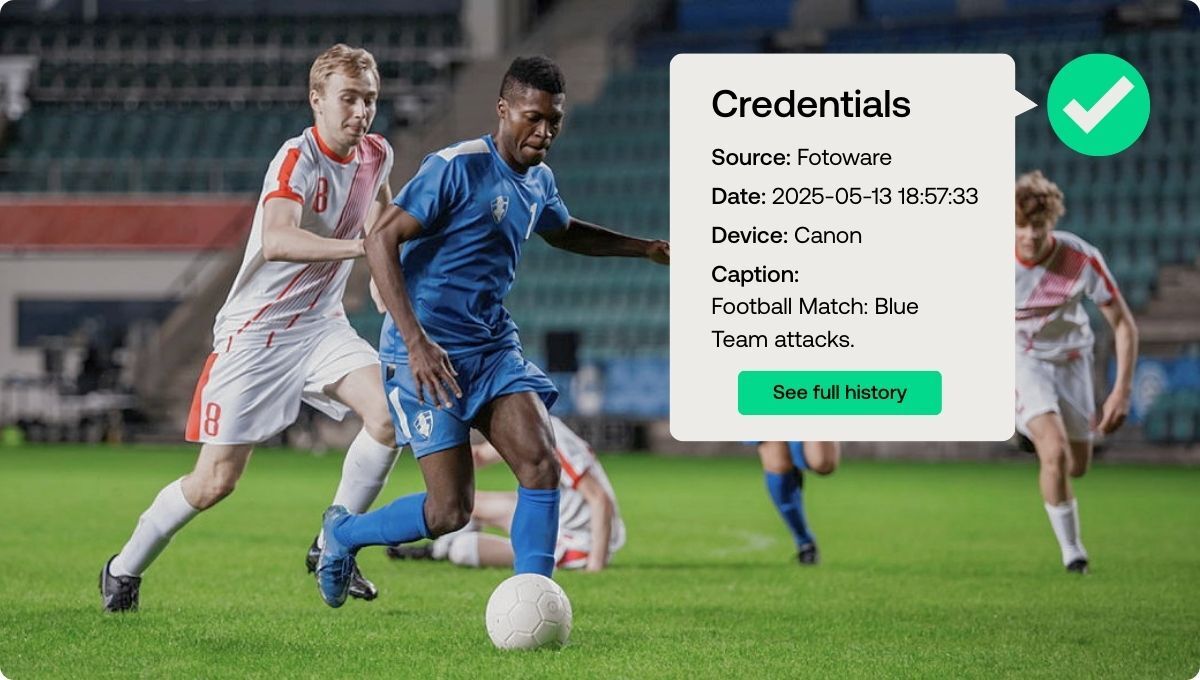

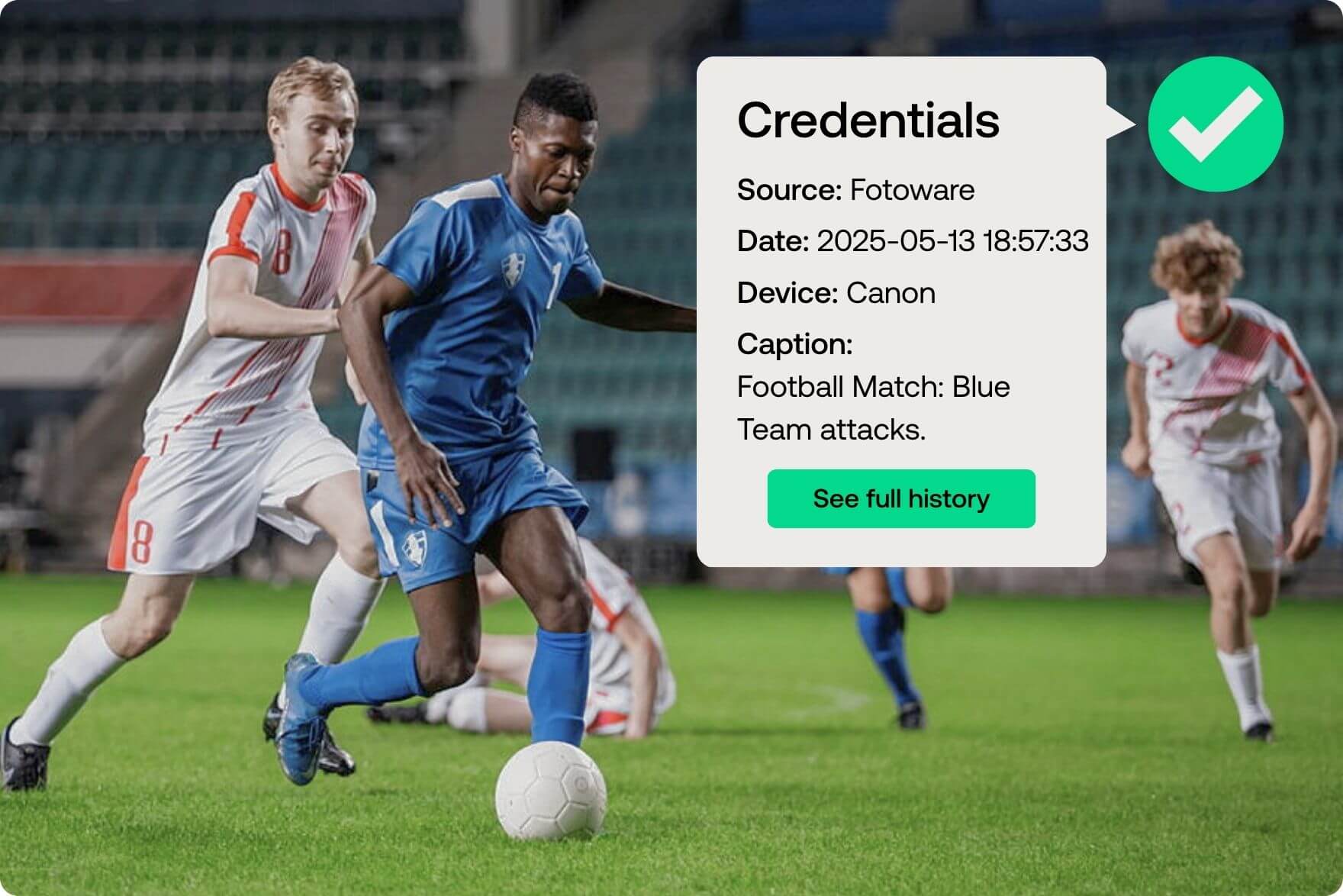

Digital content needs to be verifiable, which is why Content Authenticity and traceability are becoming essential concepts in today’s media environment. Viewers should be able to trace the origin of an image or video, see how it has been edited, and confirm who created it, so they can judge for themselves whether the content is fake or genuine.

Technologies and standards are emerging to meet this need. The Coalition for Content Provenance and Authenticity (C2PA) is developing open standards for embedding tamper-evident metadata in digital content. These Content Credentials can show where a piece of media originated, what tools were used to edit it, and whether AI was involved.
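To make “tamper-evident” more concrete, here is a minimal Python sketch of the underlying idea: a manifest records a hash of the asset’s exact bytes together with provenance claims, and a signature over that manifest lets a verifier detect changes to either. This is an illustrative assumption, not the C2PA format or API. Real Content Credentials use certificate-based digital signatures and a structured manifest embedded in the file, whereas this sketch substitutes a shared-secret HMAC for simplicity; the names `make_manifest`, `verify`, and `SIGNING_KEY` are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret. C2PA itself relies on certificate-based signatures,
# not an HMAC key; this stand-in only demonstrates the tamper-evidence property.
SIGNING_KEY = b"demo-key"


def make_manifest(asset: bytes, claims: dict) -> dict:
    """Bind provenance claims to the exact bytes of an asset and sign the result."""
    body = {"asset_sha256": hashlib.sha256(asset).hexdigest(), "claims": claims}
    payload = json.dumps(body, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"body": body, "signature": signature}


def verify(asset: bytes, manifest: dict) -> bool:
    """Return True only if neither the manifest nor the asset has been altered."""
    payload = json.dumps(manifest["body"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claims or the recorded hash were edited after signing
    # The manifest is intact; now check that the asset still matches the recorded hash.
    return manifest["body"]["asset_sha256"] == hashlib.sha256(asset).hexdigest()


image = b"example image bytes"
m = make_manifest(image, {"creator": "Staff photographer", "ai_generated": False})
print(verify(image, m))          # True: asset and manifest unchanged
print(verify(image + b"x", m))   # False: the asset changed after signing
```

Changing a single byte of the asset, or editing a claim such as `ai_generated`, makes verification fail. Note that this kind of check only reveals tampering after signing; it cannot tell whether the original claims were truthful, which is why trust in the signer still matters.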

Digital Asset Management (DAM) systems also play a critical role in maintaining content provenance within organizations. Because a DAM manages metadata reliably and tracks content throughout its lifecycle, it can help ensure that what is published is accurate, traceable, and compliant.

Just as HTTPS became the default way to secure websites and verify their identity, similar authentication layers for digital content may soon become standard. This shift won’t happen overnight, but it’s already gaining traction across major tech platforms, media organizations, and the photography industry.

— “The C2PA standard is going to be as important for Content Authenticity as XMP has been for metadata. Just as XMP gave us a common framework for describing and managing digital assets, C2PA is becoming the foundation for establishing trust in content. It provides a shared language that makes authenticity verifiable, helping us understand where an image comes from and how it has been altered along the way.”

In the end, rebuilding trust in digital content requires a combination of technology, transparency, and education. As AI-generated content becomes part of our daily lives, verifying the authenticity of content will be just as important as creating compelling stories or visuals.


Conclusion

The rise of deepfakes and generative AI marks a turning point in how we perceive and interact with digital content. While these technologies bring innovation and efficiency, they also introduce new risks to information integrity and public trust in digital content.

— “Technology vendors must be proactive in adopting emerging technologies to help us identify authentic content. If we don’t support the full content lifecycle, it won’t work.”

Protecting trust in digital assets and helping audiences distinguish between real and fake content will require a broad effort: organizations, journalists, content creators, and technology vendors must strengthen their verification practices, adapt their tools to validate content, and improve media literacy.

Above all, building trust in content will depend on collaboration across industries, because Content Authenticity is becoming a critical part of protecting the truth in the digital age.
