Content Authenticity: How to protect trust in the digital age

In a digital world where misinformation, deepfakes, and AI-generated content spread quickly, the question of what is real has become increasingly complex. This is where Content Authenticity plays a critical role. It refers to the ability to verify the origin, integrity, and history of digital content, whether it's an image, video, or document.

This article provides an in-depth look at what Content Authenticity means, why it matters more than ever, and how organizations and individuals can protect the integrity of their digital content.

What is Content Authenticity?

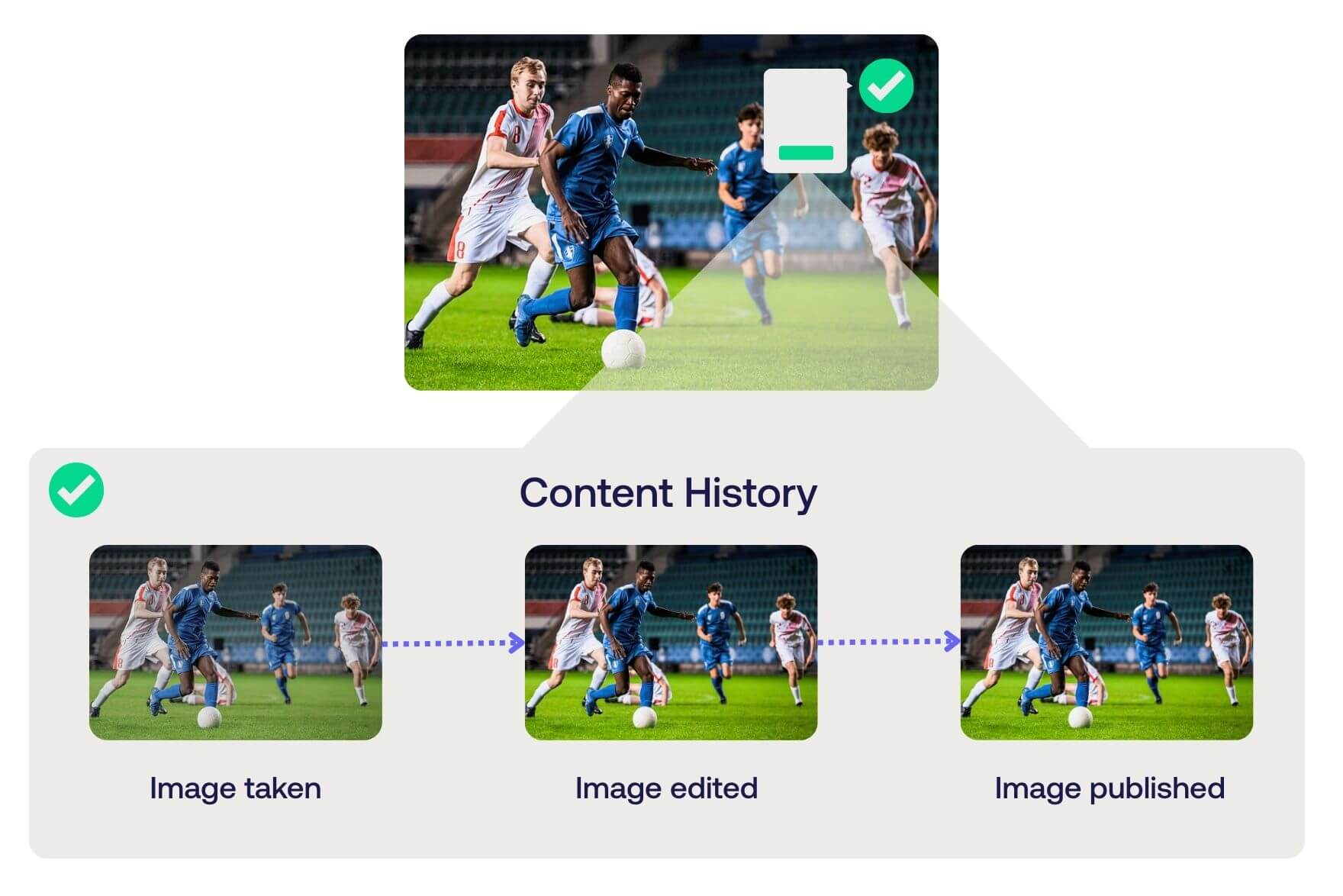

Content Authenticity refers to the ability to verify that a piece of digital content, such as an image, video, article, or audio file, is original, unaltered, and traceable to a trustworthy source. In simple terms, it means knowing where content came from, how it was created, and whether, and how, it has been modified.

As misinformation and manipulated media become more common online, Content Authenticity has emerged as a vital concept for maintaining trust online. Whether it’s a photo shared on social media, a video used in news reporting, or a document published by a government agency, people need to know they can rely on what they see and hear.

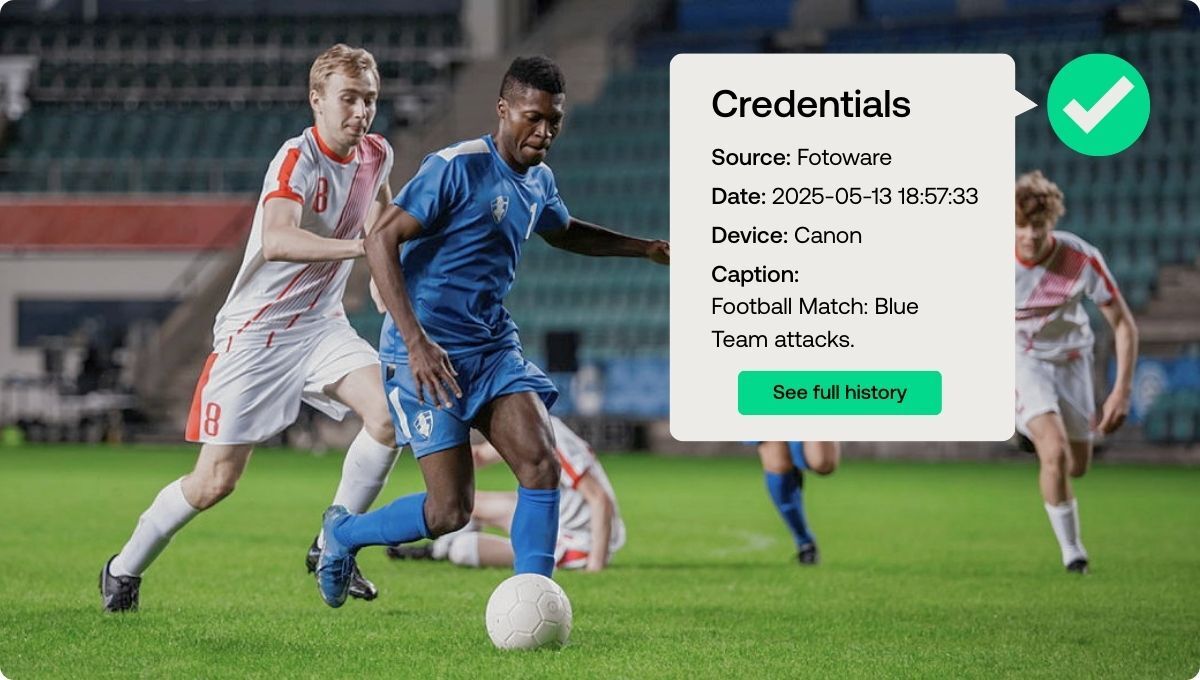

Technology plays a central role in supporting the authenticity of content. Metadata, digital signatures, and emerging frameworks like C2PA (Coalition for Content Provenance and Authenticity) help preserve and verify information about the origin and integrity of digital content. These tools make it easier to identify authentic digital content and detect tampering, especially in an era where AI-generated media is widespread.

Growing importance due to AI-generated content

The rise of generative AI has added a new layer of complexity and raised the stakes for digital Content Authenticity. AI can now create realistic images, audio, and video with astonishing accuracy, often in a matter of seconds.

While these technologies offer creative and commercial potential, they also introduce serious challenges. With hyper-realistic AI-generated content, it’s becoming difficult to distinguish fact from fabrication, and audiences need a way to identify what is real and what is synthetic.

To counter this, tools are being developed to help identify what has been generated by AI, what has been edited, and what remains unchanged, allowing users to make better-informed judgments about the content they consume.

Why Content Authenticity matters

The question of whether content is authentic directly affects how people engage with information, form opinions, and make decisions. Content Authenticity matters because it shapes trust, protects reputations, and helps society function in a fact-based, transparent way.

Without clear markers of authenticity, even genuine content may be met with doubt. This effect is known as the “liar’s dividend”: the mere existence of deepfakes and other AI fakes makes it easier to dismiss authentic media as fabricated.

Trust in media and journalism

News organizations play a critical role in informing the public, especially during elections, conflicts, or public health crises. However, trust in journalism has declined in recent years, partly due to the spread of false or misleading information. When audiences cannot verify whether a photo is genuine or whether a quote has been altered, skepticism grows.

Signaling the authenticity of the content helps restore that trust. Journalists and news outlets demonstrate transparency and accountability by verifying the source, history, and integrity of the media they publish. Provenance metadata, such as when and where a photo was taken or whether it was digitally altered, becomes an essential tool in rebuilding confidence in trustworthy reporting.

Misinformation and deepfakes

Misinformation spreads quickly online, especially when shared on social media platforms without context. Increasingly, this content is not just false but visually or audibly deceptive. Deepfakes and other forms of manipulated media can make it appear as if someone said or did something they never did.

These technologies make misinformation more convincing and harder to detect. Deepfakes have been used to impersonate politicians, CEOs, and even ordinary people, creating confusion and sometimes leading to real-world consequences. Without clear standards for Content Authenticity, it becomes difficult to separate truth from fiction.

Impact on public perception

The effects of inauthentic content are far-reaching. For individuals, exposure to misleading or manipulated content can distort their perception of events and undermine informed decision-making. For brands and businesses, the misuse or unauthorized editing of digital assets can damage credibility, especially when photos or videos are taken out of context or falsely attributed.

The stakes are even higher when it comes to democratic processes. Disinformation campaigns that use fake images, altered videos, or fabricated articles can influence elections, incite unrest, and erode trust in institutions. By contrast, authentic content that is verifiable and transparent strengthens public discourse and supports civic engagement.

Challenges in proving Content Authenticity

Although the need is clear, verifying digital Content Authenticity presents several challenges. One of the biggest issues is how easily digital files can be edited or manipulated. Image and video editing tools are widely accessible, and social media platforms often strip metadata from uploaded content, removing critical context like timestamps or location data.

The emergence of generative AI tools complicates the matter further. Synthetic media, including AI-generated text, audio, and visuals, can now be nearly indistinguishable from authentic content. Traditional verification methods, such as reverse image search or metadata inspection, are no longer enough on their own.

Finally, even when content verification is possible, performing it at scale is a major hurdle for newsrooms, governments, and content-heavy organizations.

However, even as tools, standards, and technology emerge to help determine digital provenance, there is always a chance that the provenance information itself can be tampered with.

The ultimate responsibility for determining what to trust lies with the consumer.

Content Authenticity framework and standards

To address these challenges, several global initiatives have developed frameworks and standards to verify content provenance. At the core of these efforts is the Coalition for Content Provenance and Authenticity (C2PA), which defines an open technical standard for embedding provenance metadata into digital content. This standard makes it possible to capture and preserve details about origin, creation, and modifications in a way that can be verified. Information such as the creator’s name, tools used, editing history, and time and place of publication can be cryptographically signed, making tampering detectable and the content traceable.
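
To make the idea of a verifiable record more concrete, here is a minimal Python sketch of the core mechanism, assuming a heavily simplified manifest: provenance assertions are stored alongside a SHA-256 hash of the content bytes (loosely analogous to what C2PA calls a hard binding), so any change to the bytes breaks the match. The field names and the `build_manifest` and `binding_matches` helpers are hypothetical, not the C2PA schema or any SDK’s API, and the signing step that protects the manifest itself is omitted.

```python
import hashlib


def build_manifest(content: bytes, creator: str, tool: str, actions: list[str]) -> dict:
    """Bundle provenance assertions with a hash that binds them to these exact bytes."""
    return {
        # Hypothetical, simplified field names (not the C2PA schema)
        "assertions": {"creator": creator, "tool": tool, "actions": actions},
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }


def binding_matches(content: bytes, manifest: dict) -> bool:
    """True only if the content still hashes to the value recorded in the manifest."""
    return hashlib.sha256(content).hexdigest() == manifest["content_sha256"]


photo = b"raw image bytes"  # placeholder for a real file's contents
manifest = build_manifest(photo, "Example Photographer", "ExampleCam 1.0", ["created"])
print(binding_matches(photo, manifest))            # True
print(binding_matches(photo + b"\x00", manifest))  # False: one extra byte breaks the binding
```

In a real system the manifest would then be signed, so that an attacker cannot simply edit the content and recompute the hash.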

Content Authenticity Initiative

The C2PA standard is supported by major industry players and initiatives. The Content Authenticity Initiative (CAI), launched by Adobe and joined by hundreds of media, tech, and creative partners, advocates for greater transparency in digital media.

Project Origin

Project Origin, driven by leading news organizations like the BBC and The New York Times, brings a media-first perspective to tackling misinformation.

IPTC

The International Press Telecommunications Council (IPTC) ensures that C2PA integrates smoothly with existing metadata standards already used across publishing and media workflows.

Content Credentials

A practical implementation of these efforts is Content Credentials, which applies the C2PA standard directly in tools like Photoshop and Lightroom. With Content Credentials enabled, creators can automatically attach secure metadata that records authorship, edits, and whether AI tools were used.
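
A tool reading Content Credentials could surface AI involvement roughly as follows. This Python sketch assumes the manifest has already been extracted and parsed into a dictionary with a simplified, hypothetical layout. The IPTC “digital source type” URIs it checks are real vocabulary terms that C2PA action records can reference, but the `declares_ai_use` helper is illustrative only.

```python
# Real IPTC "digital source type" vocabulary terms; the manifest layout below is hypothetical.
IPTC_BASE = "http://cv.iptc.org/newscodes/digitalsourcetype/"
AI_SOURCE_TYPES = {
    IPTC_BASE + "trainedAlgorithmicMedia",               # generated by a trained AI model
    IPTC_BASE + "compositeWithTrainedAlgorithmicMedia",  # composite that includes AI-generated elements
}


def declares_ai_use(manifest: dict) -> bool:
    """Return True if any recorded action declares a generative-AI source type."""
    return any(
        action.get("digitalSourceType") in AI_SOURCE_TYPES
        for action in manifest.get("actions", [])
    )


generated = {"actions": [{"action": "created",
                          "digitalSourceType": IPTC_BASE + "trainedAlgorithmicMedia"}]}
camera_photo = {"actions": [{"action": "created",
                             "digitalSourceType": IPTC_BASE + "digitalCapture"},
                            {"action": "edited"}]}
print(declares_ai_use(generated))     # True
print(declares_ai_use(camera_photo))  # False
```

A `False` result only means that no AI use was declared. It does not prove that none occurred, since credentials can be missing, incomplete, or stripped.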

Tools and technologies for verifying content

Technology plays a central role in verifying and maintaining the authenticity of content. A combination of metadata standards, content signing protocols, blockchain technology, and AI-driven tools makes it possible to trace content back to its origin, detect tampering, and assess whether media has been synthetically generated. Below are some of the key technologies helping organizations and individuals verify content.

AI detectors

As generative AI tools have become more accessible, so has detection software designed to identify whether an image, video, or audio file was generated or altered using artificial intelligence. These detectors analyze statistical patterns and artifacts in a file that are characteristic of AI-generated media, and they typically report a likelihood rather than a definitive verdict.

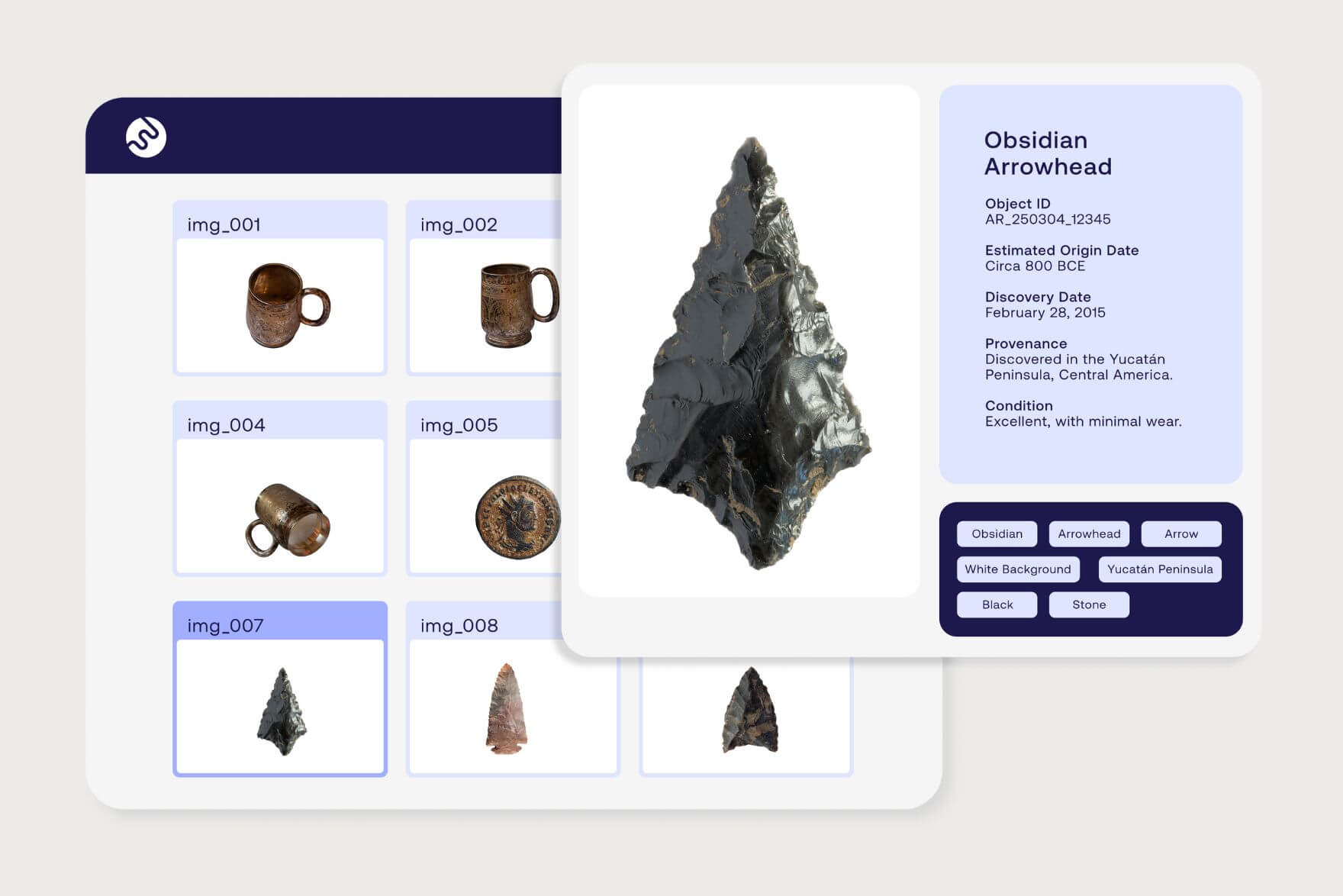

Metadata

Metadata refers to the descriptive information embedded in a file that reveals details about its creation and history. When preserved correctly, metadata can help confirm that a photo or video is original and unchanged. Many organizations turn to Digital Asset Management (DAM) systems that retain and protect metadata throughout the content lifecycle.
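
Because many platforms strip metadata on upload, a basic first check is whether a file still carries it at all. The Python sketch below walks the segment headers of a JPEG file and reports whether an EXIF block (stored in an APP1 segment that begins with `Exif\0\0`) is present. It is simplified: it ignores padding bytes and standalone markers, and it does not parse the EXIF fields themselves.

```python
def has_exif(jpeg: bytes) -> bool:
    """Walk the JPEG marker segments and report whether an APP1 'Exif' segment is present."""
    if jpeg[:2] != b"\xff\xd8":  # every JPEG starts with the SOI (start of image) marker
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            break  # not a marker: malformed data, stop scanning
        marker = jpeg[pos + 1]
        if marker == 0xDA:
            break  # SOS (start of scan): compressed image data follows, no more headers
        length = int.from_bytes(jpeg[pos + 2:pos + 4], "big")  # includes its own 2 bytes
        payload = jpeg[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length  # skip the 2-byte marker plus the segment
    return False


# A tiny hand-built header: SOI, one APP1 "Exif" segment, then SOS.
sample = b"\xff\xd8" + b"\xff\xe1\x00\x0a" + b"Exif\x00\x00II" + b"\xff\xda\x00\x02"
print(has_exif(sample))  # True
```

To check a file on disk, read it in binary mode first, for example `with open(path, "rb") as f: has_exif(f.read())`, where `path` is the image to inspect. A `False` result for an upload whose original carried EXIF data suggests the platform stripped it.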

Digital watermarking

Digital watermarking techniques embed information directly into the content of images or videos. Robust watermarks are designed to persist even when the file is resized, cropped, or re-encoded, providing a more resilient way to prove authorship and detect tampering than metadata, which is easily stripped. Some watermarking systems are visible, helping viewers identify official content, while others are hidden but traceable using detection tools.
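
One classic way to build a watermark that survives mild degradation is spread-spectrum embedding: a faint, key-dependent pseudo-random pattern is added to the content and later detected by correlation. The Python sketch below illustrates the principle on a flat list of numbers standing in for pixel values. The key, strength, and threshold are arbitrary assumptions, and Python’s `random` module is not a secure source for keyed patterns. The sketch tolerates added noise and rounding, but unlike production schemes it does not survive cropping or resizing, which shift the pattern out of alignment.

```python
import random


def _pattern(key: int, n: int) -> list[int]:
    """Key-seeded pseudo-random +1/-1 sequence; the same key always regenerates it."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(samples: list[float], key: int, strength: float = 1.0) -> list[float]:
    """Add a faint key-dependent pattern to every sample."""
    return [s + strength * b for s, b in zip(samples, _pattern(key, len(samples)))]


def detect(samples: list[float], key: int, threshold: float = 0.5) -> bool:
    """Correlate mean-removed samples with the key's pattern: about `strength` if marked, about 0 if not."""
    mean = sum(samples) / len(samples)
    pattern = _pattern(key, len(samples))
    score = sum((s - mean) * b for s, b in zip(samples, pattern)) / len(samples)
    return score > threshold


rng = random.Random(0)
host = [rng.gauss(128, 10) for _ in range(20_000)]      # stand-in for pixel intensities
marked = embed(host, key=42)                              # each sample shifts by +/-1
degraded = [round(x + rng.gauss(0, 5)) for x in marked]  # simulated noise plus integer rounding
print(detect(degraded, key=42), detect(host, key=42), detect(degraded, key=7))
```

The pattern is too faint to notice in any single sample, but summing over thousands of samples makes it stand out clearly for anyone who holds the key, while content without the mark, or a check with the wrong key, scores near zero.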

Digital signatures

Digital signatures use cryptographic techniques to prove that content has not been altered since it was signed. By applying a unique, verifiable signature to digital content at the point of creation or editing, organizations can establish a secure record of authenticity. This makes tampering detectable and helps users confirm that the content came from a trusted source.
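
The asymmetry that makes signatures useful (only the publisher can sign, but anyone can verify) can be shown with textbook RSA in a few lines of Python. This is a deliberately insecure toy, assuming two hard-coded, publicly known Mersenne primes and no padding scheme. Real systems use vetted cryptographic libraries and standardized signature schemes, and C2PA, for example, ties signing keys to X.509 certificates that identify the signer.

```python
import hashlib

# Toy key pair built from two publicly known Mersenne primes. NOT secure: anyone
# can recompute the private exponent, and no padding scheme is used. Illustration only.
P = 2**89 - 1
Q = 2**107 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (modular inverse, Python 3.8+)


def _digest(content: bytes) -> int:
    """SHA-256 of the content as an integer, reduced into the modulus range."""
    return int.from_bytes(hashlib.sha256(content).digest(), "big") % N


def sign(content: bytes) -> int:
    """Publisher side: needs the private exponent D."""
    return pow(_digest(content), D, N)


def verify(content: bytes, signature: int) -> bool:
    """Anyone holding only the public key (N, E) can run this check."""
    return pow(signature, E, N) == _digest(content)


caption = b"Photo caption: example text"
sig = sign(caption)
print(verify(caption, sig))                 # True
print(verify(caption + b" (edited)", sig))  # False: altered content no longer matches
```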


Blockchain

Blockchain technology offers a decentralized, tamper-resistant way to record the history and movement of digital content. Because each record is cryptographically linked to the one before it, changing an earlier entry is detectable. This approach is especially useful for content provenance, where verifying the source and chain of edits is critical.
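
The tamper-evidence property can be sketched as a simple hash chain in Python, where each provenance record stores the SHA-256 hash of the record before it. This shows only the linking idea: the sketch assumes a single local list with no distribution or consensus, and the `append_event` and `chain_is_intact` helpers are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _record_hash(record: dict) -> str:
    """SHA-256 over a canonical JSON encoding, so equal records always hash equally."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


def append_event(chain: list[dict], event: str, content_sha256: str) -> None:
    """Add a provenance event that commits to the hash of the previous record."""
    prev = _record_hash(chain[-1]) if chain else GENESIS
    chain.append({"event": event, "content_sha256": content_sha256, "prev": prev})


def chain_is_intact(chain: list[dict]) -> bool:
    """Recompute every link; editing any earlier record breaks the next record's 'prev'."""
    return all(chain[i]["prev"] == _record_hash(chain[i - 1]) for i in range(1, len(chain)))


history: list[dict] = []
append_event(history, "captured", hashlib.sha256(b"raw image").hexdigest())
append_event(history, "color-corrected", hashlib.sha256(b"edited image").hexdigest())
print(chain_is_intact(history))    # True
history[0]["event"] = "generated"  # rewrite the first record after the fact
print(chain_is_intact(history))    # False
```

On its own, a local list like this offers no protection against whoever controls it, since they can rewrite every link. Replicating the chain across independent parties, as a blockchain does, is what makes such rewriting impractical.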

Content Authenticity in journalism and the news

Few industries rely more heavily on authenticity than journalism. News organizations must be able to prove that their photos, videos, and stories are accurate and have not been manipulated. This is especially critical in conflict reporting, elections, and breaking news situations.

Some publishers have begun implementing proof-of-concept projects using standards like C2PA to verify the provenance of content before publication. For example, Reuters has explored secure metadata and signed attribution to establish the authenticity of images in its reporting. These efforts not only protect the integrity of the newsroom but also build trust with audiences.

As these standards gain traction, we can expect more publishers to adopt tools that verify content history as part of their editorial workflows.

The future of authentic content

As digital content becomes more decentralized, faster to create, and harder to verify, the need for authenticity will only grow. Standards like C2PA, combined with verification tools and responsible content practices, will play a central role in maintaining trust online.

Ultimately, Content Authenticity is a foundation for digital trust, enabling journalists, governments, educators, and brands to communicate confidently and transparently. Those who invest in authenticity now will be better prepared for a future where content trust is both a challenge and a competitive advantage.